Welcome to Aproxy Blog

Build knowledge on everything proxies, or pick up some dope ideas for your next project – this is just the right place for that.

DICloak: 2025 Top Antidetect Browser for Multi-Account Management

DICloak Antidetect Browser offers secure and scalable solutions for managing multiple accounts, automating workflows, and enabling seamless team collaboration, all optimised for high performance.

Latest Articles

DICloak: 2025 Top Antidetect Browser for Multi-Account Management

DICloak Antidetect Browser offers secure and scalable solutions for managing multiple accounts, automating workflows, and enabling seamless team collaboration, all optimised for high performance.

CaptchaAI 2025 Review: A Game-Changer for Automated CAPTCHA Solving

In the ever-evolving digital world of 2025, businesses and developers frequently encounter a common obstacle: CAPTCHA. While CAPTCHA systems help websites block bots, they also disrupt legitimate automation efforts and often frustrate users. CaptchaAI eme

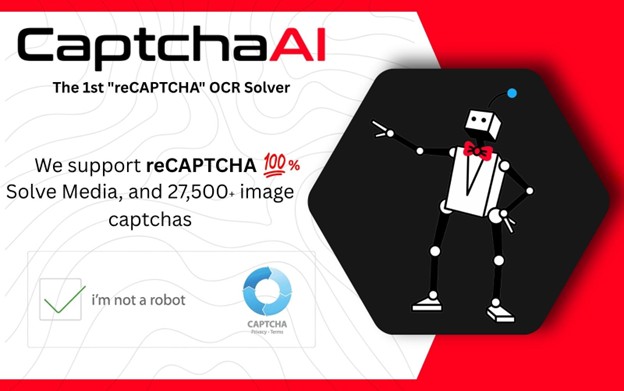

How to Use BrowserScan to Detect Browser Fingerprints

How to Use BrowserScan to Detect Browser Fingerprints

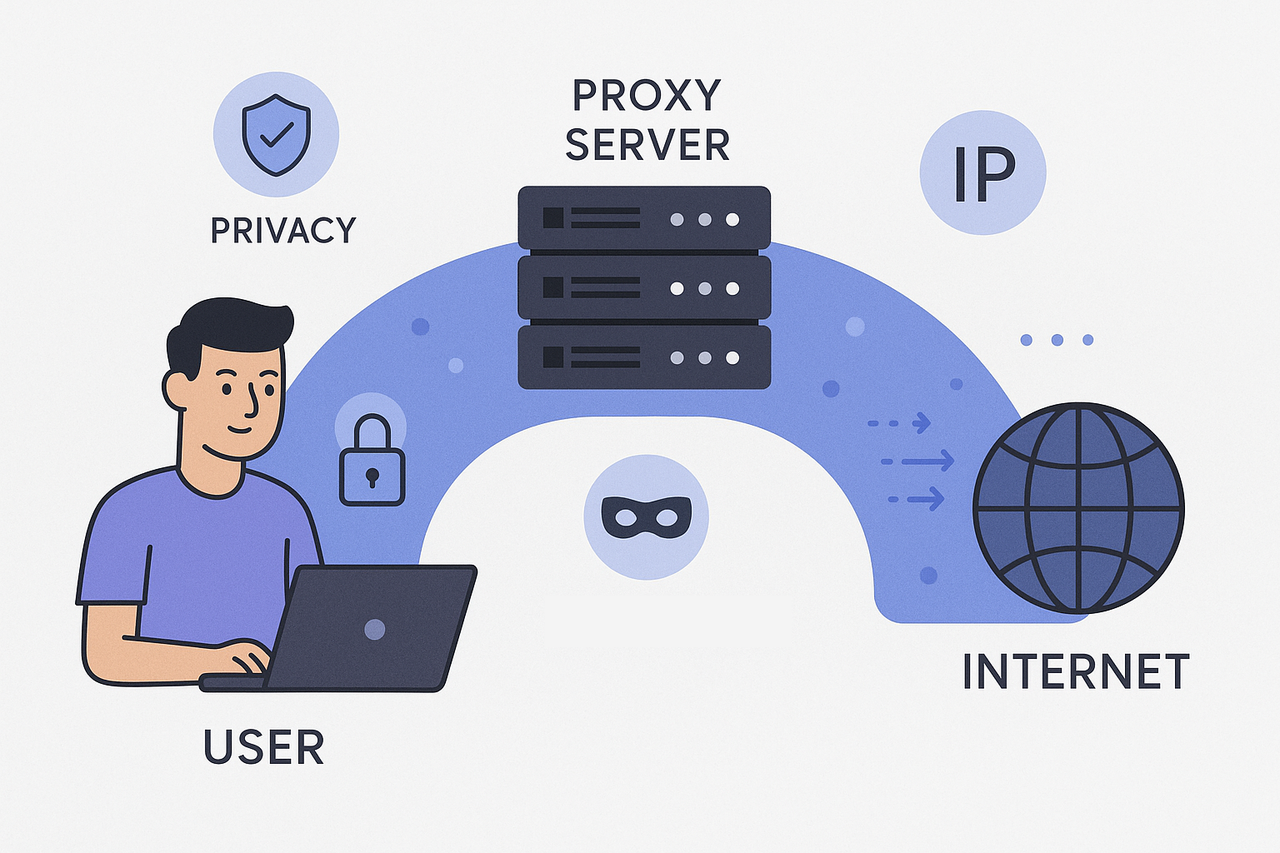

Proxy Definition: What Is a Proxy Server & How It Works

Discover the full proxy definition and how proxy servers enhance privacy, security, and speed. Learn key types, benefits, use cases, and explore trusted solutions at Aproxy.

Proxy Server vs Proxy API: What Developers Should

Discover the key differences between traditional proxy servers and proxy APIs, including performance, implementation, security, and cost considerations to help developers make informed infrastructure decisions.

Debugging Proxy Errors: A Developer’s Guide to Smart Proxy Debugging

Struggling with proxy debugging? Learn how to fix 403 errors, request header issues, and proxy timeouts while working with smart proxies in this practical guide.

Maximizing Reach with Smart Proxies for E-Commerce Ads

Discover how smart proxies for E-Commerce ads can expand your reach while minimizing risks. Learn about proxy user cases and the benefits of residential proxies in global marketing.

Real-Life Example: Using Proxies to Avoid Scraping Bans

Learn how to use proxies to bypass anti-bot measures and avoid detection while web scraping. This in-depth guide covers effective strategies and real-world examples for long-term, successful data extraction.

Unleashing the Power of Proxies for Competitive Intelligence

Unlock competitive intelligence with residential proxies. Track pricing, monitor markets, and gather insights legally—without detection. Perfect for marketers, analysts, and businesses.

How to Use Rotating Proxies for Data Scraping

Scalable data scraping needs rotating proxies to bypass IP blocks, rate limits, and geo-restrictions, ensuring consistent, stealthy access to target websites.

How to Boost TikTok Operations with Proxies IP?

In TikTok operation, Static Residential IP provides a reliable solution for brands and creators to address pain points such as account instability, limited geographical coverage and precise advertisement placement. This paper analyzes its advantages, appl

How to Increase Efficiency with Headless Browsers & Proxies IPs

This paper discusses in detail the important roles of headless browsers and Proxies IPs in web crawling, and describes how to improve the crawling efficiency through the synergistic work of these two technologies. Headless browsers can accelerate the craw

CONTACT US

[email protected]CONTACT US

[email protected]Smart Innovation Technology LimitedUNIT1021, BEVERLEY COMMERCIAL CENTRE, 87-105 CHATHAM ROAD SOUTH, TSIM SHA TSUI, KOWLOON

This website uses cookies to improve the user experience. To learn more about our cookie policy or withdraw from it, please check our Privacy Policy and Cookie Policy.